AI is intended to streamline processes, reduce human error, and enhance the overall customer experience (CX). However, the rapid adoption of this technology comes with its own set of potential risks that could undermine these benefits. Understanding these risks is crucial for businesses that want to use AI responsibly to improve service outcomes without eroding customer trust and loyalty.

AI is a significant player in call center customer experience (CX) and quality assurance (QA). With the call center AI market set to hit a massive $4.1 billion by 2027, it's clear that AI is here to stay. But what are the perceived risks that customers and call center industry leaders are wary of?

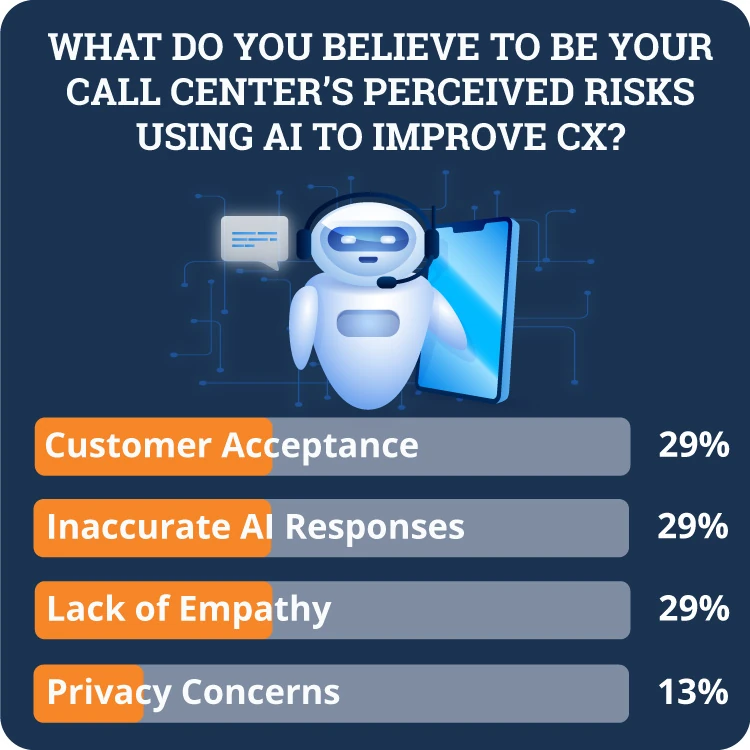

SQM Group research shows that call center industry leaders believe customer acceptance, inaccurate AI responses, and a lack of empathy are the biggest risks of using AI to improve CX in call centers. Privacy was also raised as a concern, but it was not ranked among the biggest risks.

How is AI Used to Improve CX in Call Centers?

AI technologies enhance customer experience by providing faster response times and availability around the clock, which are crucial elements in maintaining customer satisfaction and loyalty.

For example, American Express uses AI-driven virtual assistants to respond immediately to customer inquiries about account balances, transaction history, and payment due dates. These virtual assistants operate around the clock, ensuring customers can access assistance anytime.

Moreover, AI can handle routine inquiries without human intervention, allowing live agents to focus on more complex and sensitive issues that require human judgment and emotional intelligence.

For example, Erica, the AI-powered virtual assistant from Bank of America, handles routine banking tasks such as funds transfers and bill payments, which allows human agents to dedicate more time to complex financial advice and fraud prevention.

AI-powered analytics can also personalize customer interactions by leveraging data from past interactions. This personalization not only improves the customer's experience by making it feel more tailored and attentive but also increases the efficiency of the service provided.

For example, Capital One, a major financial institution, uses an AI-powered virtual assistant named Eno to personalize customer interactions. Eno draws on data from past interactions to provide a tailored and efficient experience. If a customer frequently asks about recent transactions, for instance, Eno can proactively provide this information without the customer needing to ask.
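The proactive behavior described above can be illustrated with a small sketch. This is not how Eno works internally; it simply assumes the assistant keeps a log of a customer's past request types, and the intent labels, thresholds, and the `proactive_topics` function are hypothetical. Any topic that makes up a large share of recent requests becomes a candidate for a proactive answer.

```python
from collections import Counter
from typing import List


def proactive_topics(past_intents: List[str], window: int = 10,
                     min_share: float = 0.3) -> List[str]:
    """Return topics that make up at least `min_share` of the last `window` requests."""
    recent = past_intents[-window:]
    if not recent:
        return []
    counts = Counter(recent)
    # most_common() orders by frequency, so the strongest candidates come first
    return [topic for topic, n in counts.most_common() if n / len(recent) >= min_share]


# Hypothetical request history for one customer (oldest first)
history = (["recent_transactions"] * 5 + ["card_activation"]
           + ["recent_transactions"] * 2 + ["bill_payment"] * 2)
print(proactive_topics(history))  # ['recent_transactions']
```

A real system would also weigh recency, consent, and relevance, but the principle is the same: frequently repeated requests become information the assistant can offer before being asked.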

AI is used in call centers in several impactful ways. The most common is chatbots and virtual assistants that interact directly with customers through text or voice. AI is also widely used for predictive analytics: by analyzing large volumes of historical data, it can forecast call volumes and the types of inquiries to expect, which helps with resource allocation and workforce planning.
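As a rough illustration of call volume forecasting and staffing, the sketch below uses a simple moving average of the same hourly slot across past weeks, then converts the forecast into a headcount using an assumed average handle time and target occupancy. The history, function names, and the simple workload formula are assumptions for illustration only; workforce management tools typically use richer forecasting models and queueing formulas such as Erlang C.

```python
import math
from typing import List


def forecast_calls(same_slot_history: List[int], weeks: int = 4) -> float:
    """Moving-average forecast: mean call count for this slot over the last `weeks` weeks."""
    recent = same_slot_history[-weeks:]
    if not recent:
        raise ValueError("need at least one week of history")
    return sum(recent) / len(recent)


def agents_needed(forecast: float, avg_handle_secs: float,
                  interval_secs: float = 3600, occupancy: float = 0.85) -> int:
    """Agents required to absorb the forecast workload at a target occupancy."""
    workload_secs = forecast * avg_handle_secs
    return math.ceil(workload_secs / (interval_secs * occupancy))


# Hypothetical history: Monday 9-10 a.m. call counts for the past six weeks
monday_9am = [110, 118, 120, 132, 128, 140]
calls = forecast_calls(monday_9am)  # mean of the last four weeks
print(calls, agents_needed(calls, avg_handle_secs=300))  # 130.0 13
```

Averaging only recent weeks lets the forecast follow gradual trends while smoothing out one-off spikes.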

What are the Perceived Risks of Using AI to Improve CX in Call Centers?

While AI can greatly benefit call centers, it is not without its challenges. Rushing into AI implementation, becoming overly dependent on the technology, and placing excessive trust in it can create issues and potentially hinder your call center's performance.

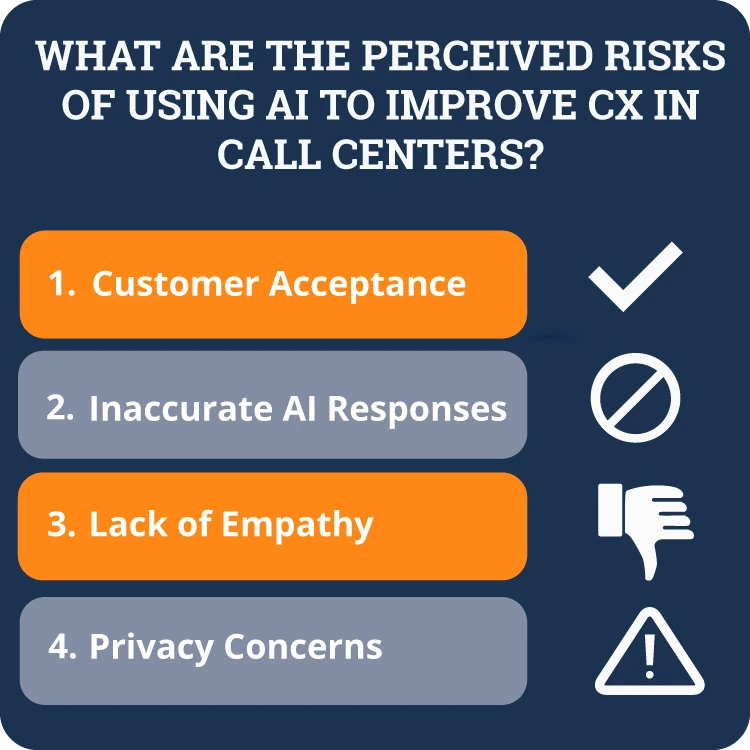

SQM Group research identifies the following perceived risks of using AI to improve CX in call centers. The first three were ranked as the biggest, and privacy was a further concern:

1. Customer Acceptance

Integrating AI in call centers raises concerns about customer acceptance. Relying on AI to handle inquiries, manage data, and resolve issues without human help can unsettle some customers, potentially undermining the user experience it aims to improve.

Recent studies and surveys provide a more granular view of customer perceptions of AI in call centers. For instance, one survey found that while nearly 60% of customers appreciate the faster service AI enables, about 40% expressed discomfort and a lack of trust in AI's ability to handle their personal issues effectively.

The survey data also showed a distinct age-related gap in acceptance, with younger customers more comfortable with AI-led conversations than older customers. These findings suggest that while AI can improve operational efficiency, it does not resonate equally with all customer segments.

Strategies to Increase Customer Confidence in AI Solutions

Call centers must take strategic measures to address these reservations and increase customer acceptance. These include:

- Transparency: Clearly informing customers when they are interacting with AI, and explaining what the AI system can and cannot do, helps set realistic expectations.

- Option for Human Intervention: Ensuring customers can request human assistance at any point during an interaction can increase their comfort level.

- Privacy Assurance: Demonstrating robust data protection measures and privacy policies can alleviate concerns about personal data misuse.

- Showcase Benefits: Communicating the specific benefits of AI, such as 24/7 availability and quicker response times, can help customers see the value in these technologies.

2. Inaccurate AI Responses

Another significant perceived risk of using AI to improve CX in call centers concerns the accuracy of AI responses. AI systems are only as good as the data used to train them; if that data is flawed or limited, the AI's responses can be incorrect or inappropriate. This can frustrate customers and escalate simple issues rather than resolve them.

An example of this is Tay, an AI chatbot Microsoft launched on Twitter in 2016. Tay was designed to interact with users and learn from those interactions to improve its responses, but the input it learned from was not adequately filtered for harmful content. As a result, it began to mimic the offensive behavior it encountered and generated highly inappropriate responses, and Microsoft took it offline within a day.

Moreover, AI systems might struggle with the context or nuance in conversations that humans naturally understand. For instance, sarcasm and regional dialects can be particularly challenging for AI to interpret correctly, leading to responses that may be perceived as confusing or irrelevant by the customer.

Strategies to Ensure Accurate AI Responses in Customer Interactions

- High-Quality Training Data: Ensure the training datasets are diverse, comprehensive, and regularly updated to reflect current language use, trends, and customer expectations.

- Regular Monitoring and Auditing: Regularly audit AI interactions to spot inaccuracies and make necessary adjustments. Use human-in-the-loop approaches in which human agents review and correct AI responses.

- Clear Escalation Protocols: Implement systems that automatically route inquiries that the AI cannot confidently handle to human agents. This ensures that customers receive accurate and appropriate responses when AI reaches its limitations.

- Regularly Update and Retrain AI Models: Continuously retrain AI models using the latest data and feedback from customer interactions. This keeps the AI up-to-date with new trends, slang, and changing customer needs.
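A clear escalation protocol can be as simple as a confidence gate in front of the AI. The sketch below assumes an intent classifier that returns a label and a confidence score between 0 and 1; the threshold, intent names, and the `Prediction` and `Router` classes are hypothetical. Anything the AI is not confident about, or that falls outside the intents it is approved to handle, is routed to a human agent and queued for review, which also feeds the auditing and retraining steps listed above.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical policy values; tune them against audited transcripts
CONFIDENCE_THRESHOLD = 0.80
AI_SUPPORTED_INTENTS = {"balance_inquiry", "payment_due_date", "transaction_history"}


@dataclass
class Prediction:
    intent: str        # label from the (assumed) intent classifier
    confidence: float  # classifier confidence, 0.0 to 1.0


@dataclass
class Router:
    # Low-confidence or out-of-scope cases, kept for human review and retraining
    review_queue: list[tuple[str, Prediction]] = field(default_factory=list)

    def route(self, utterance: str, pred: Prediction) -> str:
        """Return "ai" only for supported intents at or above the confidence threshold."""
        if pred.intent in AI_SUPPORTED_INTENTS and pred.confidence >= CONFIDENCE_THRESHOLD:
            return "ai"
        self.review_queue.append((utterance, pred))
        return "human"


router = Router()
print(router.route("What's my balance?", Prediction("balance_inquiry", 0.93)))  # ai
print(router.route("I want to dispute a charge", Prediction("dispute_charge", 0.97)))  # human
```

Gating on both confidence and an approved intent list means a confident but out-of-scope prediction still reaches a person.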

3. Lack of Empathy

Another critical concern is the lack of empathy in AI interactions. While AI can be programmed to recognize keywords and phrases indicative of emotions such as frustration or anger, it cannot genuinely understand or empathize with human emotions.

This emotional disconnect can be particularly problematic when customers are looking for reassurance, empathy, or understanding, such as when they are raising a grievance or trying to resolve a complaint.

Customers can feel undervalued if they perceive they're interacting with a machine that is indifferent to their emotional state. This can undermine the customer’s trust in the organization, affecting customer loyalty and potentially harming the company’s reputation.

Strategies for Mitigating the Lack of Empathy in Call Center AI Models

- Human-in-the-Loop Approach: Incorporate a human-in-the-loop model where AI handles routine inquiries and escalates complex or emotionally charged situations to human agents. This ensures that customers receive empathetic responses when needed while benefiting from the efficiency of AI for simpler tasks.

- Empathy Training for AI: Develop AI models that are trained to understand customer queries and respond in an empathetic manner. This involves programming the AI to recognize emotional cues in customer language (such as frustration or satisfaction) and respond appropriately. Use machine learning to continually improve these responses by analyzing past interactions and customer feedback.

- Transparent AI Communication: Be transparent with customers about the AI's capabilities and limitations. Clearly indicate when customers are interacting with AI versus human agents. Setting clear expectations can manage customer perceptions and reduce disappointment if AI responses lack the depth of empathy that a human might provide.
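The keyword-based emotion recognition mentioned earlier, combined with human-in-the-loop escalation, might look like the sketch below. The cue list, threshold, and reply text are hypothetical, and simple keyword matching is a deliberately crude stand-in for the trained sentiment or emotion models a production system would use. The point it illustrates is that a detected emotional cue triggers a handoff to a person rather than an AI attempt at empathy.

```python
import re
from typing import Optional, Tuple

# Hypothetical frustration cues; a real system would use a trained emotion model
FRUSTRATION_CUES = ["ridiculous", "unacceptable", "frustrated", "angry",
                    "third time", "speak to a person"]
ESCALATION_THRESHOLD = 1  # assumed policy: escalate on any detected cue


def frustration_score(message: str) -> int:
    """Count distinct frustration cues, matched as whole words or phrases."""
    # Normalize to lowercase words separated by single spaces
    text = " ".join(re.findall(r"[a-z']+", message.lower()))
    return sum(1 for cue in FRUSTRATION_CUES
               if re.search(rf"\b{re.escape(cue)}\b", text))


def respond(message: str) -> Tuple[str, Optional[str]]:
    """Return (handler, acknowledgment); emotionally charged messages go to a human."""
    if frustration_score(message) >= ESCALATION_THRESHOLD:
        return ("human", "I'm sorry for the trouble. "
                         "I'm connecting you with a member of our team now.")
    return ("ai", None)


print(respond("This is the third time I've called. It's ridiculous!")[0])  # human
print(respond("Can you tell me my balance?"))  # ('ai', None)
```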

4. Privacy Concerns

Integrating AI into call centers also raises substantial privacy concerns. AI systems require access to vast amounts of data to learn and make decisions, and this dependence exposes sensitive customer information to potential risks, including data breaches and unauthorized data analysis.

Customers are increasingly aware of, and concerned about, how their data is used and kept secure. Misuse or perceived misuse of customer data can lead to trust issues and a reluctance to engage with AI-powered services. There are also concerns that AI might unintentionally draw biased conclusions from the data it processes, leading to unfair treatment or decisions.

Furthermore, the opacity of many AI algorithms, often described as ‘black box’ algorithms, poses additional challenges. Customers and regulators may question the accountability and transparency of AI decisions, especially when it is unclear how an AI system reached its conclusions.

Strategies for Addressing Customer Privacy Concerns with AI in the Call Center

- Data Encryption: Implement robust data encryption protocols to protect customer data in transit and at rest. This prevents unauthorized access to sensitive information.

- Anonymization and Minimization of Data: Limit the collection and retention of personally identifiable information (PII) to what is necessary for the transaction or service. Use anonymization techniques to strip or pseudonymize data where possible, especially in AI training datasets, to mitigate the risks associated with data breaches or misuse.

- Transparent Data Handling Practices: Clearly communicate to customers how their data will be used, stored, and protected during AI interactions. Provide easily accessible privacy policies and terms of service that outline these practices in detail. Offer customers options for controlling their data, such as opt-out mechanisms or preference settings that let them choose the level of data sharing they are comfortable with.

- Regular Audits: Conduct regular audits of AI systems and call center operations to ensure that privacy policies and data handling practices are being followed consistently.
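To make the anonymization and minimization step more concrete, here is a minimal sketch of preparing a call record for an AI training dataset. It keeps only the fields the pipeline needs, replaces the customer ID with a keyed hash (HMAC-SHA256 from Python's standard library), and redacts obvious identifiers from the transcript. The field names, key, and regular expressions are illustrative assumptions; the patterns are far from exhaustive, and keyed hashing is pseudonymization rather than full anonymization, because anyone holding the key can re-link records.

```python
import hashlib
import hmac
import re

# Hypothetical key; in practice load it from a secrets manager, never hard-code it
PSEUDONYM_KEY = b"replace-with-secret-from-vault"

# Data minimization: the only fields the (assumed) training pipeline needs
ALLOWED_FIELDS = {"customer_id", "channel", "transcript"}

# Illustrative patterns only; real PII detection needs far broader coverage
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")          # 13-16 digits
PHONE_RE = re.compile(r"\b\d{3}[-. ]?\d{3}[-. ]?\d{4}\b")  # North American style


def pseudonymize(value: str) -> str:
    """Stable keyed hash, so one customer maps to one token without exposing the ID."""
    return hmac.new(PSEUDONYM_KEY, value.encode(), hashlib.sha256).hexdigest()[:16]


def redact(text: str) -> str:
    """Replace emails, card numbers, and phone numbers with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_for_training(record: dict) -> dict:
    """Return a minimized, pseudonymized copy; the original record is not modified."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["customer_id"] = pseudonymize(minimized["customer_id"])
    minimized["transcript"] = redact(minimized["transcript"])
    return minimized
```

Because the hash is deterministic for a given key, analysts can still group one customer's interactions in the training set without ever seeing the real identifier.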